Microsoft will ID its AI art with a hidden watermark

Image: Bing Image Creator

Artists concerned about others passing off AI-generated art as their own will now be able to breathe a bit easier: Microsoft has agreed to sign all AI art that its apps generate with a cryptographic watermark indicating it was made with an algorithm.

The Coalition for Content Provenance and Authenticity (C2PA) began work in 2021 to develop an open standard for indicating the origin of digital images, including whether they are authentic or AI-generated. The issue was thrust into the spotlight in March, when AI-generated images of the Pope in a stylish puffy jacket went viral, and AI-art generator Midjourney clamped down to prevent more of them.

Microsoft, a founding member of the C2PA, will announce at its Microsoft Build developer conference this week that it will cryptographically sign AI-generated images from Bing Image Creator and Microsoft Designer.

Images made with Bing Image Creator already include a small “b” Bing logo in the bottom right-hand corner. Images generated by Microsoft Designer, the company’s visual design tool, do not. Now, Microsoft will sign images from both tools according to the C2PA standard, with their origin clearly disclosed in each image’s metadata.

One of the best available AI image generators, Adobe Firefly, already does this with a feature called Content Credentials—although it’s only supported within Adobe’s apps and doesn’t carry over to the metadata that’s visible in other apps such as Windows 11 Photos.

Mark Hachman / IDG

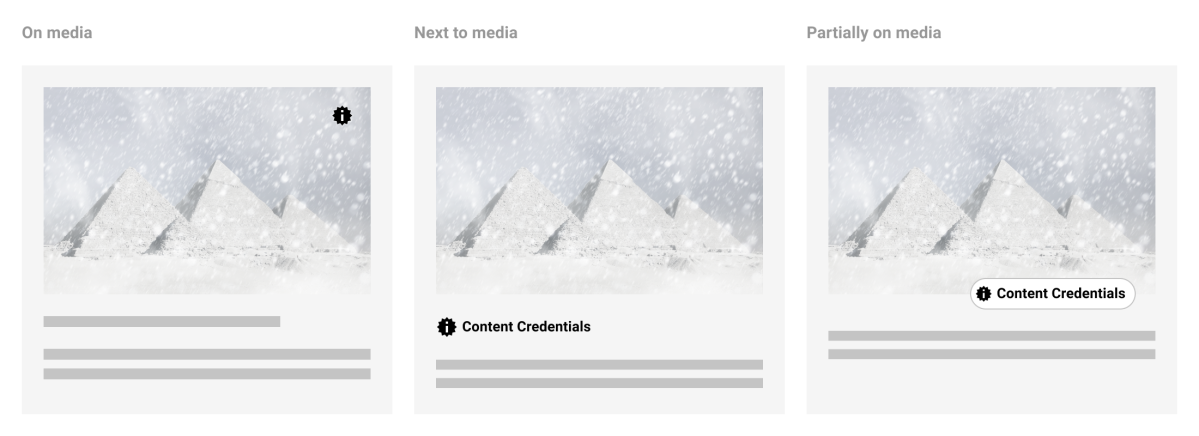

According to the C2PA specification, consumers will see a small indicator either hovering over or next to the generated image, in addition to the metadata.

Interestingly, Microsoft says it will support “major image and video formats” in both tools, even though Image Creator and Designer currently generate only images. Companies like Runway, however, have already launched tools that generate short AI video clips.

C2PA

Establishing digital ownership of AI art

Who exactly creates AI art, and thus who owns it and who can profit from it, has become a major concern in the creative world. Stock imagery giant Getty Images has sued Stability AI, the developer of Stable Diffusion, in both the U.S. and the United Kingdom, arguing that AI art algorithms have no right to train on copyrighted images. The U.S. Copyright Office has taken a middle ground, stating that AI-generated art is not subject to copyright unless it can be shown that a person has substantially modified the original AI-generated output.

Microsoft is also addressing another angle: AI art as disinformation. At Build, the company is announcing Azure AI Content Safety, a service that will allow AI to either assist or replace human content moderators. The service assigns a content score to each image it scans and flags images for human moderators to review. It will launch in June for $1.50 per 1,000 scanned images, Microsoft said. Watermarking its own AI-generated content, and encouraging others to do so, will simplify the process of identifying AI-generated images.

The concern over AI content has become so critical that camera makers Leica and Nikon are building the C2PA standard directly into cameras to authenticate images as real, and not AI-generated, according to Adobe.

Author: Mark Hachman, Senior Editor

As PCWorld’s senior editor, Mark focuses on Microsoft news and chip technology, among other beats. He has formerly written for PCMag, BYTE, Slashdot, eWEEK, and ReadWrite.
