Meta's Make-A-Video creates AI-animated GIFs

Image: Meta

To date, the term “AI art” has meant “static images.” No longer. Meta is showing off Make-A-Video, in which the company combines AI art and interpolation to create short, looping video GIFs.

Make-A-Video.studio isn’t available to the public yet. Instead, Meta is presenting it as a demonstration of what the company itself can do with the technology. And yes, while this is technically video (in the sense that more than a few frames of AI art are strung together), it’s still probably closer to a traditional GIF than anything else.

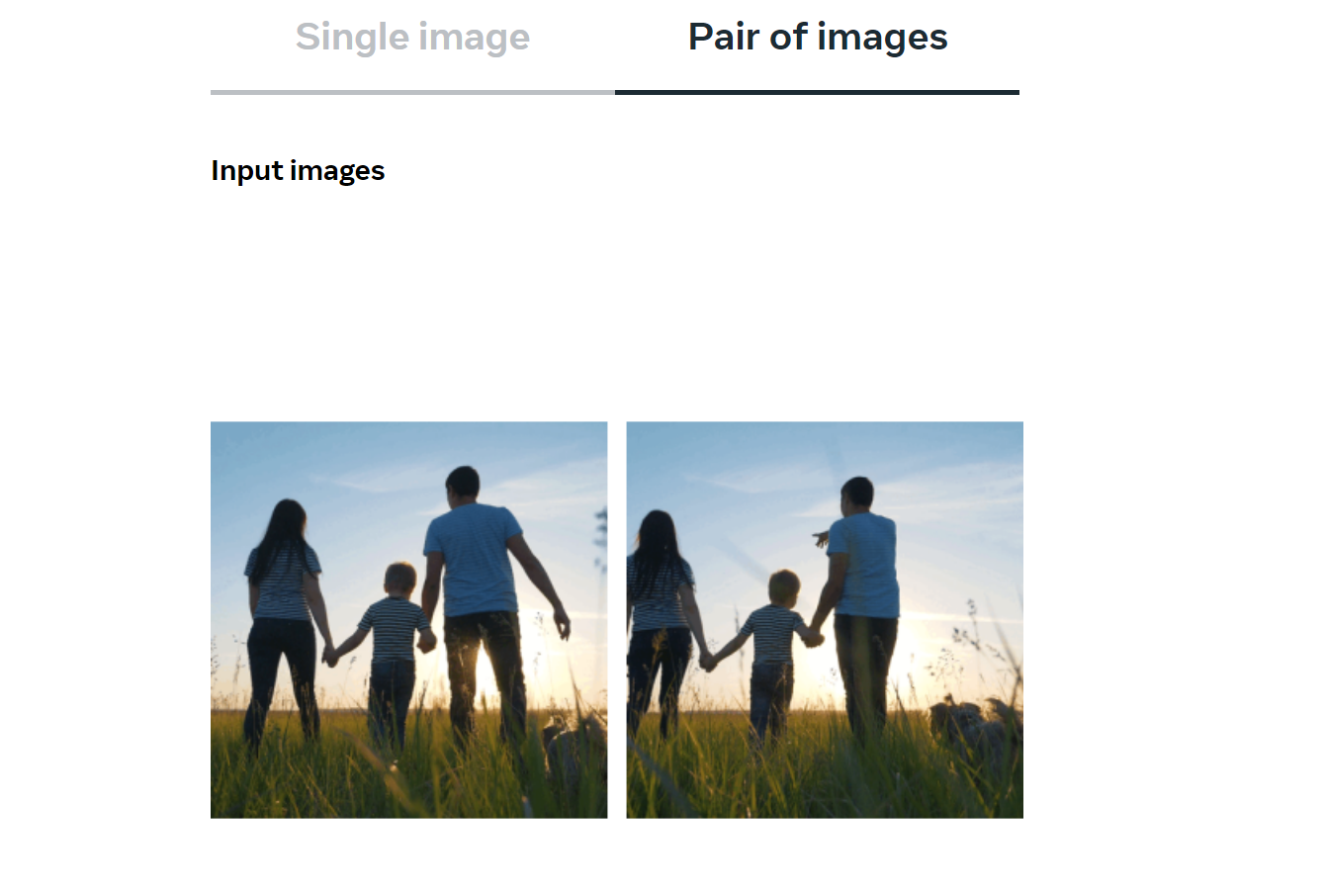

No matter. Judging by the demonstration on Meta’s site, Make-A-Video accomplishes three things. First, the technology can take two related images, whether of a water droplet in flight or of a horse in full gallop, and create the intervening frames. More impressively, Make-A-Video appears to be able to apply motion to a single still image in an intelligent fashion: given a still image of a boat, for example, it creates a short video of the boat moving across the waves.
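To see why “creating the intervening frames” is harder than it sounds, consider the naive approach: a linear cross-fade that simply blends the pixels of the first image into the second. The sketch below is purely illustrative and is not Meta’s method; the function name `interpolate_frames` and the representation of frames as 2D lists of grayscale values are assumptions made for this example. A cross-fade like this produces ghosted double exposures rather than a horse actually moving its legs, which is the gap a learned model such as Make-A-Video is meant to close.

```python
def interpolate_frames(start, end, num_between):
    """Naive linear cross-fade (hypothetical helper, not Meta's technique).

    start and end are same-shape 2D lists of grayscale pixel values.
    Returns num_between frames that blend start into end, excluding
    the two endpoint frames themselves.
    """
    # Both frames must have the same number of rows and columns.
    if len(start) != len(end) or any(len(a) != len(b) for a, b in zip(start, end)):
        raise ValueError("frames must have the same shape")

    frames = []
    for k in range(1, num_between + 1):
        # t is how far (0..1) this frame sits between start and end.
        t = k / (num_between + 1)
        # Each output pixel is a weighted average of the two input pixels.
        frame = [
            [(1 - t) * a + t * b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(start, end)
        ]
        frames.append(frame)
    return frames
```

For example, `interpolate_frames([[0.0, 0.0]], [[1.0, 0.5]], 3)` yields three in-between frames at 25%, 50%, and 75% of the way from the first image to the second. Every pixel fades in place; nothing moves, which is exactly what real motion interpolation must avoid.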

Finally, Make-A-Video can put it all together. From the prompt “a teddy bear painting a portrait,” Meta showed off a small GIF of an animated teddy bear painting itself. That demonstrates not only the ability to create AI art, but also the ability to infer action from it, as the company’s research paper indicates.


“Make-A-Video research builds on the recent progress made in text-to-image generation technology built to enable text-to-video generation,” Meta explains. “The system uses images with descriptions to learn what the world looks like and how it is often described. It also uses unlabeled videos to learn how the world moves. With this data, Make-A-Video lets you bring your imagination to life by generating whimsical, one-of-a-kind videos with just a few words or lines of text.”

That probably means Meta is training the algorithm on real video it has captured. What isn’t clear is where that video comes from. Meta’s research paper on the subject doesn’t indicate how video could be sourced in the future, and one has to wonder whether anonymized video captured from Facebook could be used as the seed for future art.

This isn’t entirely new, at least conceptually. Tools like VQGAN+CLIP Turbo can take a text prompt and turn it into an animated video, but Meta’s work appears more sophisticated. It’s hard to say for sure, though, until the model is released for the public to try.

Nevertheless, this takes AI art into another dimension: motion. How long will it be before Midjourney and Stable Diffusion do the same on your PC?

Author: Mark Hachman, Senior Editor

As PCWorld’s senior editor, Mark focuses on Microsoft news and chip technology, among other beats. He has previously written for PCMag, BYTE, Slashdot, eWEEK, and ReadWrite.
