AI Tom Hanks didn't offer me a job, but it sure sounds like he did

Image: Mark Hachman / IDG via Dreamstudio.ai

Image: Mark Hachman / IDG via Dreamstudio.aiTom Hanks didn’t just call me to pitch me a part, but it sure sounds like it.

Ever since PCWorld began covering the rise of various AI applications like AI art, I’ve been poking around in the code repositories in GitHub and links within Reddit, where people will post tweaks to their own AI models for various approaches.

Some of these models actually end up on commercial sites, which either roll their own algorithms or adapt others that have published as open source. A great example of an existing AI audio site is Uberduck.ai, which offers literally hundreds of preprogrammed models. Enter the text in the text field and you can have a virtual Elon Musk, Bill Gates, Peggy Hill, Daffy Duck, Alex Trebek, Beavis, The Joker, or even Siri read out your pre-programmed lines.

We uploaded a fake Bill Clinton praising PCWorld last year and the model already sounds pretty good.

Training an AI to reproduce speech involves uploading clear voice samples. The AI “learns” how the speaker combines sounds with the goal into learning those relationships, perfecting them, and imitating the results. If you’re familiar with the excellent 1992 thriller Sneakers (with an all-star cast of Robert Redford, Sidney Poitier, and Ben Kingsley, among others), then you know about the scene in which the characters need to “crack” a biometric voice password by recording a voice sample of the target’s voice. This is almost the exact same thing.

Normally, assembling a good voice model can take quite a bit of training, with lengthy samples to indicate how a particular person speaks. In the past few days, however, something new has emerged: Microsoft Vall-E, a research paper (with live examples) of a synthesized voice that requires just a few seconds of source audio to generate a fully programmable voice.

Naturally, AI researchers and other AI groupies wanted to know if the Vall-E model had been released to the public yet. The answer is no, though you can play with another model if you wish, called Tortoise. (The author notes that it’s called Tortoise because it’s slow, which it is, but it works.)

Train your own AI voice with Tortoise

What makes Tortoise interesting is that you can train the model on whatever voice you choose simply by uploading a few audio clips. The Tortoise GitHub page notes that you should have a few clips of about a dozen seconds or so. You’ll need to save them as a .WAV file with a specific quality.

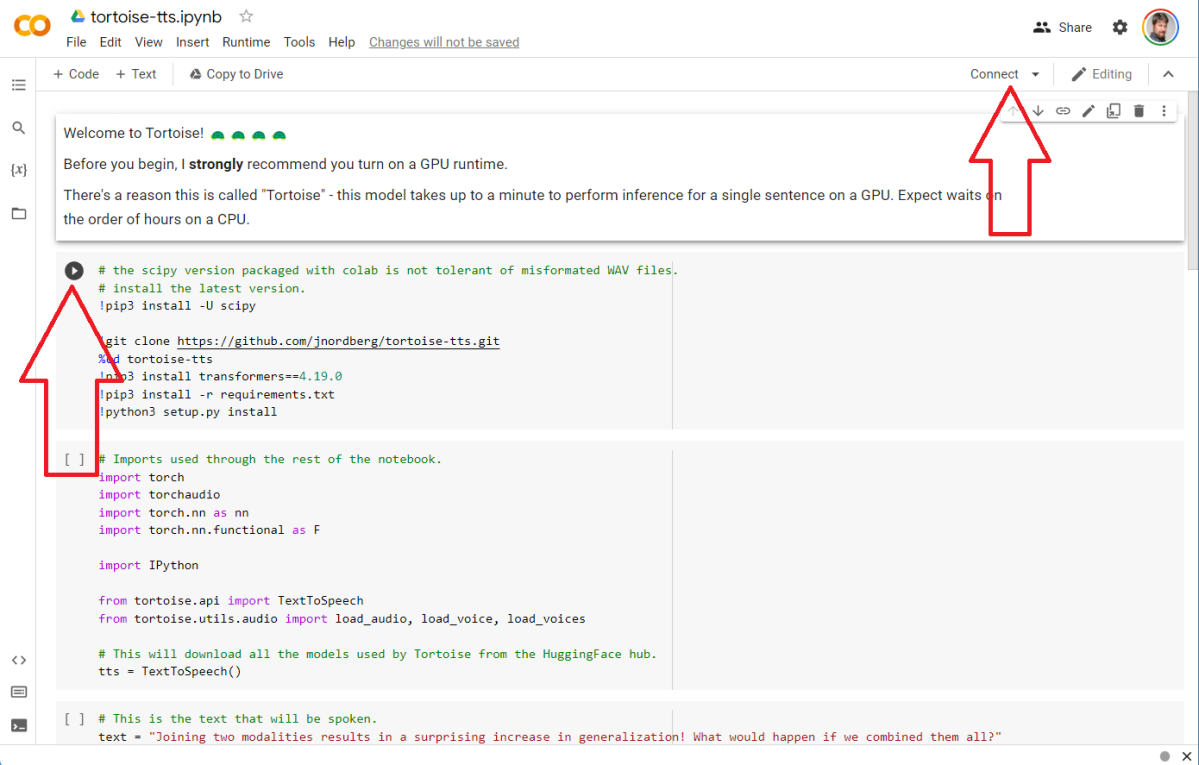

How does it all work? Through a public utility that you might not be aware of: Google Colab. Essentially, Collab is a cloud service that Google provides that allows access to a Python server. The code that you (or someone else) writes can be stored as a notebook, which can be shared with users who have a generic Google account. The Tortoise shared resource is here.

The interface looks intimidating, but it’s not that bad. You’ll need to be logged in as a Google user and then you’ll need to click “Connect” in the upper-right-hand corner. A word of warning. While this Colab doesn’t download anything to your Google Drive, other Colabs might. (The audio files this generates, though, are stored in the browser but can be downloaded to your PC.) Be aware that you’re running code that someone else has written. You may receive error messages either because of bad inputs or because Google has a hiccup on the back end such as not having an available GPU. It’s all a bit experimental.

Mark Hachman / IDG

Mark Hachman / IDG

Mark Hachman / IDG

Each block of code has a small “play” icon that appears if you hover your mouse over it. You’ll need to click “play” on each block of code to run it, waiting for each block to execute before you run the next.

While we’re not going to step through detailed instructions on all of the features, just be aware that the red text is user modifiable, such as the suggested text that you want the model to speak. About seven blocks down, you’ll have the option of training the model. You’ll need to name the model, then upload the audio files. When that completes, select the new audio model in the fourth block, run the code, then configure the text in the third block. Run that code block.

If everything goes as planned, you’ll have a small audio output of your sample voice. Does it work? Well, I did a quick-and-dirty voice model of my colleague Gordon Mah Ung, whose work appears on our The Full Nerd podcast as well as various videos. I uploaded a several-minute sample rather than the short snippets, just to see if it would work.

The result? Well, it sounds lifelike, but not like Gordon at all. He’s certainly safe from digital impersonation for now. (This is not an endorsement of any fast-food chain, either.)

But an existing model that the Tortoise author trained on actor Tom Hanks sounds pretty good. This is not Tom Hanks speaking here! Tom also did not offer me a job, but it was enough to fool at least one of my friends.

The conclusion? It’s a little scary: the age of believing what we hear (and soon see) is ending. Or it already has.

Author: Mark Hachman, Senior Editor

As PCWorld’s senior editor, Mark focuses on Microsoft news and chip technology, among other beats. He has formerly written for PCMag, BYTE, Slashdot, eWEEK, and ReadWrite.

Recent stories by Mark Hachman:

Arc’s new browser for Windows is too twee for meThe new Meta.ai website can draw amazing AI art instantlyWhy pay? One of Photoshop’s best features is free in Windows