'AI PCs' are everywhere at CES. What does it really mean for you?

Image: Getty

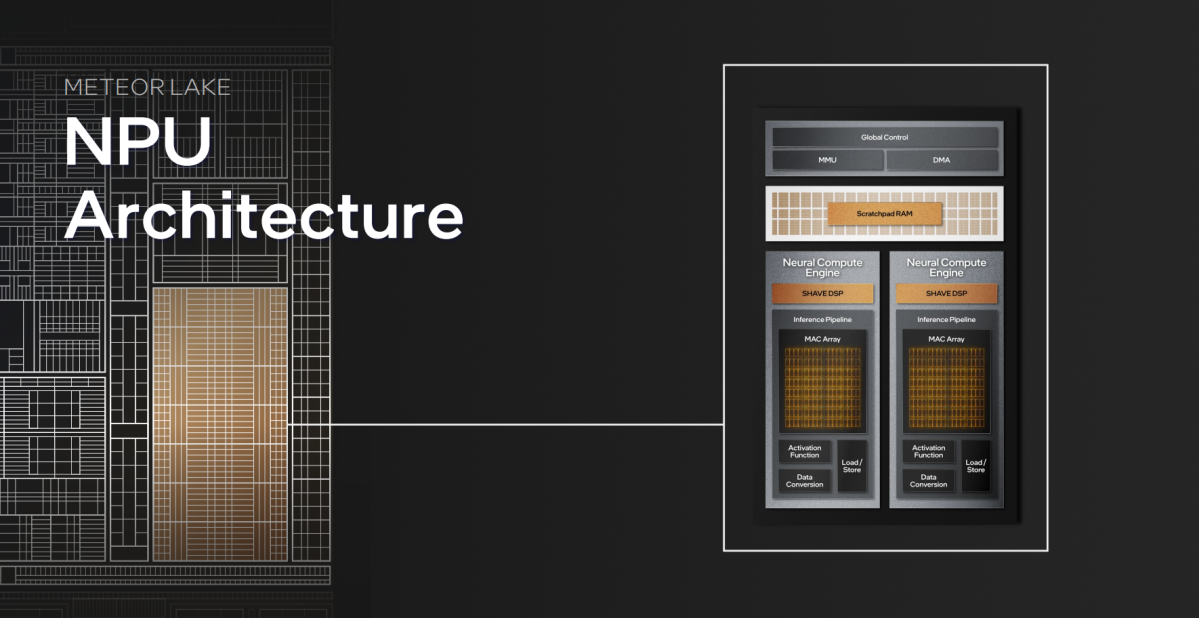

To the surprise of exactly nobody who has been following the PC industry the last six months, “AI PCs” were everywhere at CES 2024, powered by new chips like Intel’s Core Ultra and AMD’s Ryzen 8000 with dedicated “Neural Processor Units” (NPUs). These help accelerate AI tasks locally, rather than reaching out to cloud servers (like ChatGPT and Microsoft Copilot). But what does that actually mean for you, an everyday computer user?

That’s the question I hoped to answer as I wandered the show floor, visiting PC makers of all shapes and sizes. Most early implementations of local, NPU-processed software have focused heavily on creator workloads, improving performance in tools like Adobe Photoshop, DaVinci Resolve, and Audacity. But how can local AI help Joe Schmoe?

After scouring the show, I can say that NPU improvements aren’t especially compelling yet in these early days — though if you have an Nvidia GPU, you’ve already got powerful, practical AI at your fingertips.

But first, NPU-based AI.

Local AI takes baby steps

The HP Omen Transcend.

IDG / Matthew Smith

Frankly, NPU-driven AI isn’t compelling yet, though it can pull off some cool parlor tricks.

HP’s new Omen Transcend 14 showed off how the NPU can be used to offload video-streaming tasks while the GPU ran Cyberpunk 2077 — nifty, to be sure, but once again focused on creators. Acer’s Swift laptops thankfully take a more practical angle. They integrate Temporal Noise Reduction and what Acer calls PurifiedView and PurifiedVoice 2.0 for AI-filtered audio and video, with a three-mic array, and there are more AI capabilities promised to come later this year.

MSI’s stab at local AI also tackles cleaning up Zoom and Teams calls. A Core Ultra laptop demo showed Windows Studio Effects tapping the NPU to automatically blur the background of a video call. Next to it, a laptop set up with Nvidia’s awesome AI-powered Broadcast software was doing the same. The Core Ultra laptop used dramatically less power than the Nvidia notebook, since it didn’t need to fire up a discrete GPU to process the background blur, shunting the task to the low-power NPU instead. So that’s cool — and unlike RTX Broadcast, it doesn’t require you to have a GeForce graphics card installed.

Just as practically, MSI’s new AI engine intelligently detects what you’re doing on your laptop and dynamically changes the battery profile, fan curves, and display settings as needed for the task. Play a game and everything gets cranked; start slinging Word docs and everything ramps down. It’s cool, but existing laptops already do this to some degree.

MSI also showed off a nifty AI Artist app, running on the popular Stable Diffusion local generative AI art framework, that lets you create images from text prompts, create suitable text prompts from images you plug in, and create new images from images you select. Windows Copilot and other generative art services can already do this, of course, but AI Artist performs the task locally and is more versatile than simply slapping words into a box to see what pictures it can create.

Lenovo’s vision for NPU-driven AI seemed the most compelling. Dubbed “AI Now,” this text input-based suite of features seems genuinely useful. Yes, you can use it to generate images, natch — but you can also ask it to automatically set those images as your wallpaper.

More helpfully, typing prompts like “My PC config” instantly brings up hardware information about your PC, removing the need to dive into arcane Windows sub-menus. Asking for “eye care mode” enables the system’s low-light filter. Asking it to optimize battery life adjusts the power profile depending on your usage, similar to MSI’s AI engine.

Those are useful, albeit somewhat niche, but the feature that impressed me most was Lenovo’s Knowledge Base feature. You can train AI Now to sift through documents and files stored in a local “Knowledge Base” folder, and quickly generate reports, synopses, and summaries based on only the files within, never touching the cloud. If you stash all your work files in it, you could, for instance, ask for a recap of all progress on a given project over the past month, and it will quickly generate that using the information stored in your documents, spreadsheets, et cetera. Now this seems truly useful, mimicking cloud AI-based Office Copilot features that Microsoft charges businesses an arm and a leg for.

But. AI Now is currently in the experimental stage, and when it launches later this year, it will come to China first. What’s more, the demo I saw wasn’t actually running on the NPU yet — instead, Lenovo was using traditional CPU grunt for the tasks. Sigh.

And that’s my core takeaway from the show. NPUs are only just starting to appear in computers, and the software that taps into them ranges from gimmicky to “way too early” — it’ll take time for the rise of the so-called “AI PC” to develop in any practical sense.

Unless you already have an Nvidia graphics card installed, that is.

The AI PC is already here with GeForce

Thiago Trevisan/IDG

Visiting Nvidia’s suite after the laptop makers drove home that the AI PC is, in fact, already here if you’re a GeForce owner.

That shouldn’t come as a surprise. Nvidia is at the forefront of AI development and has been driving the segment for years. Features like DLSS, RTX Video Super Resolution, and Nvidia Broadcast are all tangible, practical real-world AI applications that users love and use every day. There’s a reason Nvidia can charge a premium for its graphics cards.

The company was showing off some cool cloud-based AI tools — its ACE character engine for game NPCs can now hold full-blown generative chats about anything, in a variety of languages, and its iconic Jinn character did not appreciate when I told him his ramen sucked — but I want to focus on the local AI tools, since that’s the point of this article.

A lineup of creator-focused Nvidia Studio laptops was on hand showing just how powerful GeForce’s dedicated ray tracing and AI tensor cores can be at accelerating creation tasks, such as real-time image rendering or removing items from photos. But again, while that’s amazing for creators, it’s of little practical benefit to everyday consumers.

Two other AI demos are.

One, a supplement to the existing RTX Video Super Resolution feature that uses GeForce’s AI tensor cores to upscale and beautify low-resolution videos, focuses on using AI to translate standard dynamic range video into high dynamic range (HDR). Dubbed RTX Video HDR, it looked truly transformative in demos I witnessed. The overly crushed darks in a Game of Thrones scene caused by video compression were cleared up and brightened using the feature, delivering a stunning increase in image quality. It was a similar story in an underground scene from another still, where the back of a subway was dark beyond comprehension, but RTX Video HDR let you pick out a tunnel, garbage cans, and other hidden aspects previously lost to the gloom. It looks great and should be arriving in a GeForce driver later this month.

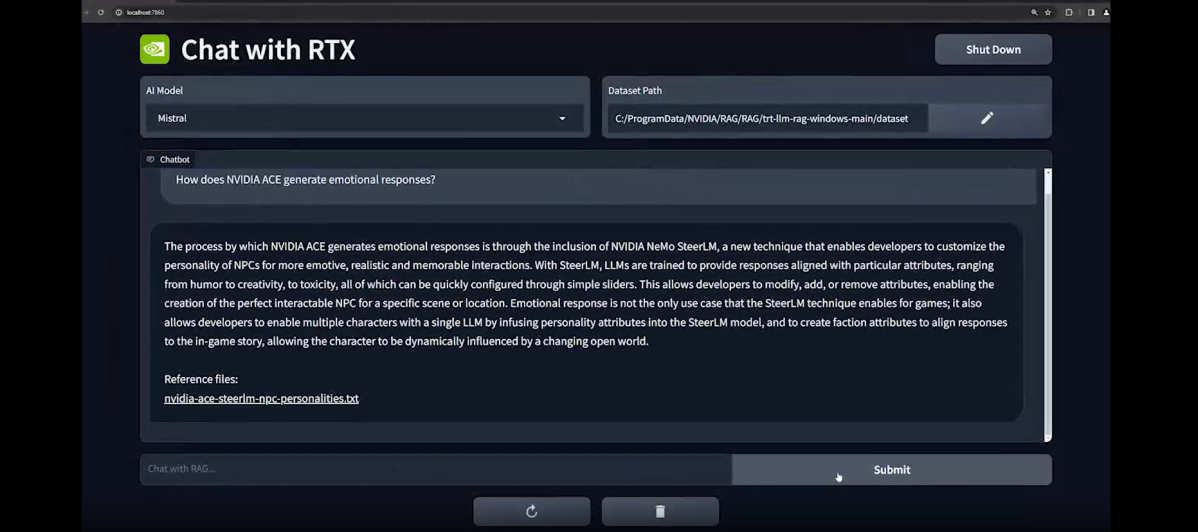

Chat with RTX was able to provide an accurate description of Nvidia’s new ACE features after being pointed to a local file.

Nvidia

Then there was Chat with RTX, which truly impressed me. Most AI chatbots bump your requests out to the cloud, where they’re processed on company servers and then sent back to you. Not Chat with RTX, an upcoming application that runs on either the Mistral or Llama LLMs. The key thing here is it can also be trained on your local text, PDF, doc, and XML files, so you can ask the application questions about your specific information and needs, and it all runs locally. You can ask it questions like, “Where did Sarah say I should go to dinner next time I’m in Vegas?” and have the answer pop right up from your files.

Better yet, since it runs locally, the answers in our demos appeared much faster than the answers generated by cloud-based LLMs like ChatGPT. You can also point Chat with RTX to specific YouTube videos to ask questions about their content, or to receive a general summary. Chat with RTX scans the YouTube-provided transcript for that particular video and your answer appears in seconds. It is rad.

Chat with RTX should also be out in demo form, and Nvidia is releasing its backbones so that developers can create new programs that tap into it.

Compared to the AI demos I saw for NPU applications, the features on display at Nvidia’s booth felt both more practical and much more powerful. Nvidia reps told me that was a goal for the company: to show that AI PCs already exist, and can drive genuinely useful experiences, if you have a GeForce GPU, of course.

Bottom line

Intel

And that’s really my overarching takeaway on the AI PC from CES 2024. Will AI amount to more than former buzzwords like “blockchain” and “the metaverse,” which fizzled out in spectacular fashion? I think so. Companies like Nvidia are already using it to amazing effect. But NPUs are practically still in diapers and learning to talk. There’s no overly compelling practical feature that taps into them yet unless you’re a content creator.

Don’t get me wrong: The future looks potentially bright for local NPUs as a whole — the entire computing industry is willing it into being before our very eyes — but if you want a true AI PC right now, one with tangible, practical benefits for everyday computer users, you’re better off investing in a tried-and-true Nvidia RTX GPU than a chip with a newfangled NPU.

Author: Brad Chacos, Executive editor

Brad Chacos spends his days digging through desktop PCs and tweeting too much. He specializes in graphics cards and gaming, but covers everything from security to Windows tips and all manner of PC hardware.
