Intel and AMD are building AI into PCs. It doesn't matter yet—but it will

Image: OpenAI.com / Dall-E

The rise of consumer-focused artificial intelligence applications (like AI art and ChatGPT) was the most dynamic trend of 2022. But don't get too excited quite yet: new laptops from AMD and Intel with built-in AI functions aren't worth opening your wallet for.

Given the breakneck pace of AI development, though, they may very well be worth it by next year's CES.

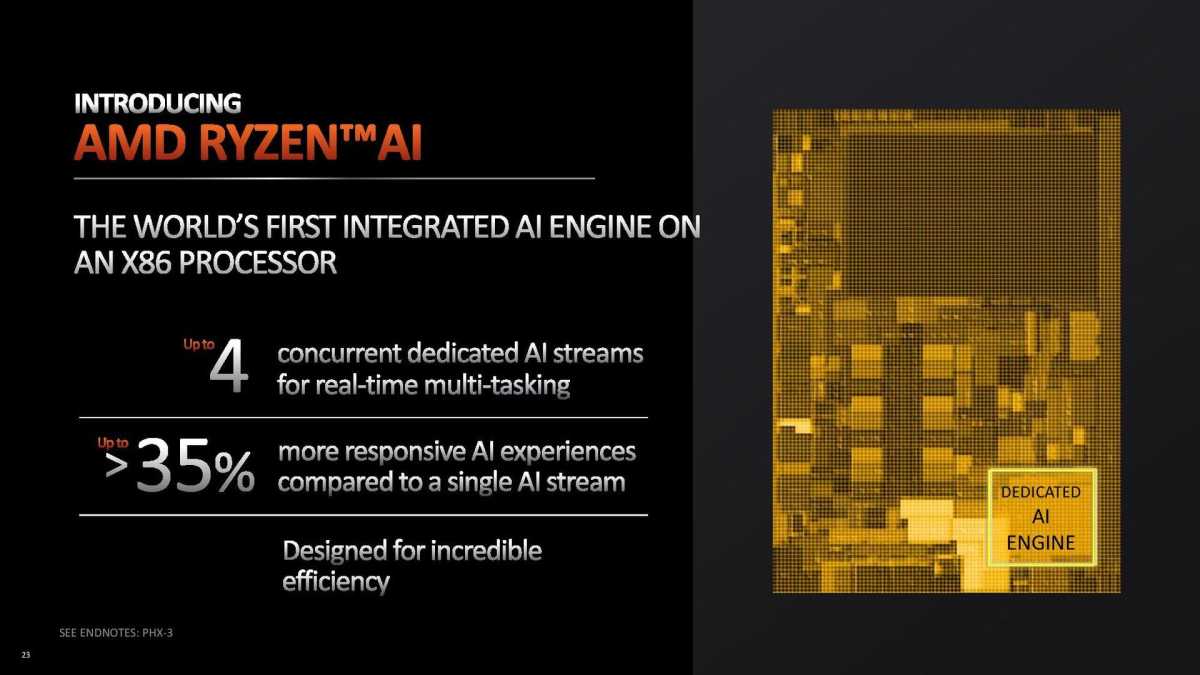

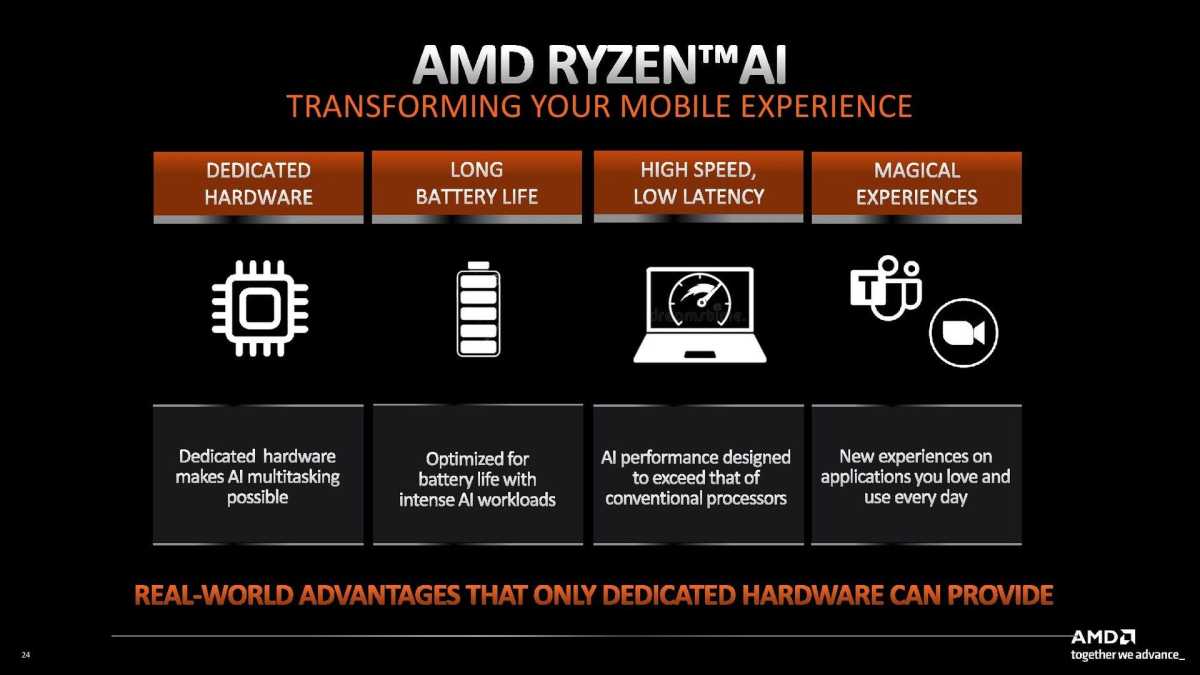

Here's what's going on. Deep within the confusing mishmash of processor architectures that make up AMD's latest mobile Ryzen chips lies XDNA, the new AI hardware architecture that AMD is launching within the Ryzen Mobile 7040 Series as "Ryzen AI." (Think of XDNA as the AI counterpart to RDNA, the foundation of AMD's Radeon graphics cores.) Intel has similar plans, though for now it's using a discrete Movidius AI card as a placeholder until its Meteor Lake chips integrate a "real" AI core. Qualcomm has offered AI technology as part of its Arm-based Snapdragon chips for years; those chips power many Android smartphones but remain a niche player in Windows PCs.

Right now, there is only one reason to buy an AI-powered PC: Windows Studio Effects, the collection of webcam technologies built into the Snapdragon-wielding Microsoft Surface Pro 9 5G. Windows chief Panos Panay joined AMD CEO Lisa Su onstage to talk up Studio Effects during XDNA's introduction. The technology includes several features: integrated background blur, noise filtering to cut out background sounds, Eye Contact, and automatic framing. All use AI in some way; Eye Contact, for example, uses the webcam to track where your eyes are looking and then applies AI to make it appear as if you're constantly making eye contact with the camera.

Images: AMD

That’s interesting, though not ground-breaking. Still, Microsoft has smartly used AI to improve productivity on your local device in ways that make you look more alert and productive. Without it, you simply have to put more effort in: pay attention, make sure you’re centered on camera, and conduct meetings from a quiet location.

But AI is still finding its footing on several fronts.

First, there's the hardware. Remember that PC sound accelerators started out within the CPU, migrated out into a standalone chip or card (RIP, SoundBlaster), and then moved back into the CPU again. GPUs traced a similar path, but they've become so valuable that they seem likely to remain discrete components indefinitely. With AI, no one's quite sure: AI art applications like Stable Diffusion were designed for PC GPUs, but they require a substantial amount of video RAM just to run. It's far easier to ask services like Midjourney or Microsoft's stunning Designer app to compute those AI functions in the cloud, even if you have to "pay" via a subscription or advertising.

There's also the very reasonable question of what AI apps and services we'll see in the years to come. Over the last six months, AI expanded beyond art to AI-powered chatbots like ChatGPT, which some think may replace or supplement search engines like Bing. Companies quietly toiled away on AI server hardware and machine-learning models for years, and they are reaping the fruits of those labors now. Training massive AI models can literally take months, but models like ChatGPT can already generate text that could earn passing grades in high school. What will the next generation hold? We all remember what the early days of the PC were like, with Pentium chips pushing the PC into the mainstream. It very much feels like that time now, even if we can't know for sure what the future will look like.

Images: AMD

It's probably fair to say, then, that 2022 was the year AI began emerging into the public consciousness. This year, 2023, feels like the year it all begins shaking out: what services will be viable, what we'll use, what society will ask for in the future. It seems very likely that forks of Stable Diffusion and other AI frameworks will be ported to Ryzen AI and the Movidius hardware, and we'll have a better understanding of how XDNA compares to RDNA in terms of what it can accomplish.

That's an exciting future, though it's still the future. AMD told PCWorld that its XDNA architecture was designed around an FPGA (Field Programmable Gate Array) it obtained through its Xilinx acquisition, a technology that allows the hardware to be quickly reconfigured. FPGAs make sense when a technology isn't fully understood yet and the hardware needs to be adjusted as it evolves.

Subsequent chip revisions in 2024 and 2025 will likely integrate AI more deeply into our computing lives: top-to-bottom integration, more dedicated logic. Who knows? We may use AI to "train" digital models of ourselves, so those models can serve as AI proxies that schedule dentist appointments, say, with knowledge of our calendars and contacts. We could create a video avatar of ourselves as a sort of visual voice mail, if we still used voice mail.

On the other hand, people bet big on the metaverse. The future a company wants isn't always the future that arrives.

We doubt you'll rush out to buy new AI-powered PC processors, and for now, we'd recommend you wait. But we're also fairly confident that AI will be a part of your PC's future, whenever that future arrives.

Author: Mark Hachman, Senior Editor

As PCWorld’s senior editor, Mark focuses on Microsoft news and chip technology, among other beats. He has formerly written for PCMag, BYTE, Slashdot, eWEEK, and ReadWrite.
